Featured Projects

Explore our groundbreaking research projects in immersive technologies, from therapeutic interventions to adaptive learning environments.

Lexi Learn

Advancing Adaptive Literacy Learning in Immersive Environments

Research Team: Neon Cao (Developer), Lucas Yeykelis (Developer), Nasir Grant (UI/UX Designer), Dan Zahal (Audio Engineer), Chris Edgar (Project Manager), Bryson Rudolph (Lab Manager, VESEL), and Marie Holm (Visiting Research Scholar, Haptics).

Lexi Learn is a research project exploring how immersive virtual environments (IVEs) and artificial intelligence (AI) can be integrated into early literacy education through multisensory and adaptive design. Developed as a literacy-focused educational game, Lexi Learn transforms reading and spelling into an interactive and exploratory experience. Within a calm, nature-inspired virtual environment, learners engage in playful literacy tasks that combine movement, sound, and visual association to reinforce phonetic understanding and word recognition.

Grounded in design-based research and co-creation methodologies, the project brings together expertise from design, psychology, and education to examine how play experiences can foster motivation, engagement, and literacy skills. The integration of adaptive and generative AI scaffolding allows the learning environment to respond dynamically to each learner's pace and progress, supporting differentiated and inclusive instruction.

By merging play-based learning principles with technological innovation, Lexi Learn contributes to emerging knowledge on how multisensory and adaptive educational tools can enhance literacy development. The project aims to inform future pedagogical practices and guide the responsible integration of immersive technologies into primary education in ways that are equitable, engaging, and human-centered.

REboot

Mobile Game for Therapeutic Parent-Child Play

A number of in-person therapeutic modalities—such as Child Parent Relationship Therapy, Filial Play Therapy, and Theraplay—use parent-child play as the primary mechanism for healing and connection. REboot builds on these foundations by developing a mobile game designed to facilitate therapeutic play between parents and children.

By designing the intervention for mobile, we aim to create a tool that is scalable, accessible, and enjoyable. REboot takes the form of a digital board game: players roll a virtual die to move tokens along a path, collecting points and unlocking bonus items that can be traded for in-game rewards. Between rounds, parent-child dyads are prompted to engage in short, off-screen mini games—each carefully selected and reviewed by a panel of play therapists to enhance specific aspects of the parent-child relationship.

The project currently has a working prototype and is actively recruiting participants to test its effectiveness. Please contact Justin Jacobson at jdj108@miami.edu.

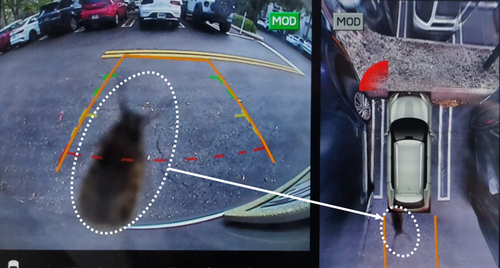

AV Perception Safety Testing

Testing Autonomous Vehicle Resilience to Perception Failures

Autonomous vehicle (AV) safety relies on the integrity of the vehicle's perception systems. However, these systems are vulnerable to non-obvious failures, including perception failures known as hallucinations. This creates a critical and often unmeasured safety gap, because these unpredictable hallucinations are not addressed by conventional verification and validation methods, which often assume a perfect or near-perfect perception stack.

This project introduces a novel component-agnostic simulation framework designed to systematically test AV resilience to these failures. Our methodology intercepts the data flow between the AV's perception and planning modules, allowing us to inject realistic hallucinations directly into the system's decision-making process. We create controlled and repeatable test scenarios that force the AV to react to perception failures, a process that is impractical or too dangerous to replicate in the real world.

The experiments demonstrate that even simple phantom objects can cascade through the system, causing the AV's control system to execute dangerous and unnecessary maneuvers that lead to collisions and near-collisions. The framework provides a foundational methodology to enable developers to identify AV perception vulnerabilities before deployment.
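To make the interception idea concrete, here is a minimal Python sketch of injecting a phantom object between a perception output and a planner. It is an illustration under stated assumptions, not the project's framework: `DetectedObject`, `simple_planner`, `with_phantom`, the 20 m braking threshold, and the frame window are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class DetectedObject:
    """Hypothetical perception output: one object relative to the ego vehicle."""
    label: str
    x_m: float  # longitudinal distance ahead of the ego vehicle, in metres
    y_m: float  # lateral offset from the lane centre, in metres


Planner = Callable[[List[DetectedObject]], str]


def simple_planner(objects: List[DetectedObject]) -> str:
    """Toy planner (assumption): brake hard if anything is in-lane within 20 m."""
    for obj in objects:
        if 0.0 < obj.x_m < 20.0 and abs(obj.y_m) < 1.5:
            return "emergency_brake"
    return "cruise"


def with_phantom(planner: Planner, phantom: DetectedObject,
                 start_frame: int, end_frame: int):
    """Wrap a planner so a phantom object is appended to the perception
    output for frames in [start_frame, end_frame). The planner itself is
    untouched, which keeps the injection independent of its internals."""
    def intercepted(frame: int, objects: List[DetectedObject]) -> str:
        if start_frame <= frame < end_frame:
            objects = objects + [phantom]  # new list; real detections are not mutated
        return planner(objects)
    return intercepted


# Repeatable scenario: one real car far ahead, a phantom pedestrian on frames 3 and 4.
phantom = DetectedObject("pedestrian", x_m=10.0, y_m=0.0)
intercepted = with_phantom(simple_planner, phantom, start_frame=3, end_frame=5)
real_detections = [DetectedObject("car", x_m=60.0, y_m=0.0)]
decisions = [intercepted(frame, real_detections) for frame in range(6)]
print(decisions)
```

In this toy scenario the planner cruises until the phantom appears, brakes for the two injected frames, then resumes. Because the scenario is fully specified by the phantom and the frame window, it can be replayed exactly, which is the property that makes such tests repeatable.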

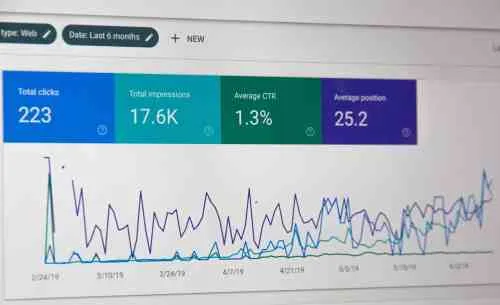

Digital Experiences for Behavior Activation

Adaptive AI Coaching for Enhanced Cycling Performance

Psychological interventions can significantly boost endurance performance, with studies showing improvements of 2–8%. Yet current coaching methods often fail to deliver support when it is most effective for the individual. Traditional approaches rely on static, fixed-interval cues or self-initiated strategies, ignoring the rider's unique physiological state and moment-to-moment perception of effort, which the psychobiological model of performance identifies as the core limiting factor in endurance.

Our project addresses this critical gap by developing an adaptive AI coaching system that learns precisely when motivational support will be most effective for each individual cyclist. This innovative system uses machine learning to analyze real-time physiological data (like heart rate and power output patterns) and deliver personalized verbal affirmations at the optimal moment, aiming to reduce perceived exertion and increase the motivation to tolerate discomfort.
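As a rough illustration of what learning when to deliver support could look like, the Python sketch below uses a simple epsilon-greedy bandit over coarse physiological states. This is a swapped-in technique chosen for brevity, not the project's actual model: the class `AffirmationTimer`, the state thresholds (90% of maximum heart rate, 95% of target power), and the reward definition are all assumptions made for the example.

```python
import random
from collections import defaultdict


class AffirmationTimer:
    """Hypothetical epsilon-greedy learner deciding whether to affirm or wait."""

    ACTIONS = ["wait", "affirm"]  # ties resolve to "wait", the first entry

    def __init__(self, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value = defaultdict(float)  # (state, action) -> running mean reward
        self.count = defaultdict(int)    # (state, action) -> number of updates

    @staticmethod
    def state(heart_rate_bpm: float, max_hr_bpm: float,
              power_w: float, target_power_w: float) -> tuple:
        """Bucket raw signals into a coarse state (thresholds are assumptions)."""
        hr = "high_hr" if heart_rate_bpm / max_hr_bpm >= 0.9 else "mod_hr"
        power = "fading" if power_w < 0.95 * target_power_w else "on_target"
        return (hr, power)

    def choose(self, state: tuple) -> str:
        """Explore with probability epsilon, otherwise pick the best-known action."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.ACTIONS)
        return max(self.ACTIONS, key=lambda a: self.value[(state, a)])

    def update(self, state: tuple, action: str, reward: float) -> None:
        """Incrementally update the mean reward observed for (state, action)."""
        key = (state, action)
        self.count[key] += 1
        self.value[key] += (reward - self.value[key]) / self.count[key]


# Usage with exploration disabled so the behaviour is deterministic.
timer = AffirmationTimer(epsilon=0.0)
s = AffirmationTimer.state(heart_rate_bpm=175, max_hr_bpm=190,
                           power_w=230, target_power_w=250)
first_choice = timer.choose(s)   # nothing learned yet, so the default is to wait
# Assumed reward: change in mean power (watts) in the window after an affirmation.
timer.update(s, "affirm", reward=8.0)
later_choice = timer.choose(s)   # an observed benefit now favours affirming
print(s, first_choice, later_choice)
```

The contrast with a static, fixed-interval cue is that the decision here depends on the rider's current state and on outcomes observed for that rider, rather than on elapsed time alone. A real system would need a much richer state representation and a carefully validated reward signal.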

This study's primary objective is to evaluate whether these adaptive, AI-delivered personalized affirmations can enhance indoor cycling power output during a 20-minute time trial compared to static-affirmation and exercise-only control groups. Specifically, we will compare the effectiveness of adaptive vs. static affirmations on key cycling performance metrics, examine the relationship between physiological markers and optimal affirmation timing, and assess the impact on the cyclists' perceived exertion and motivational states.

This research is an important step in applying digital media and adaptive machine learning to real-time exercise coaching. If successful, this technology could be seamlessly integrated into commercial cycling apps and smart trainers, democratizing access to highly personalized, effective coaching for people worldwide.

RADIOACTIVE – Radiotherapy Patient Education with Virtual Reality

Immersive Storytelling for Oncology Patient Preparation

This research explores the application of immersive technologies to improve patient understanding and emotional readiness for complex medical procedures. With a focus on oncology patients undergoing radiation therapy, this line of work investigates how immersive storytelling and virtual hospital walkthroughs can demystify the treatment environment and alleviate pre-treatment anxiety.

By incorporating first-person narratives and visual simulations, patients can experience a realistic preview of the radiotherapy process before their actual treatment begins. This approach allows them to familiarize themselves with the treatment room, equipment, and procedures in a safe, controlled virtual environment.

The research aims to reduce patients' anxiety, increase treatment comprehension, and ultimately enhance patient satisfaction. By leveraging the unique capabilities of virtual reality to create presence and emotional engagement, this project contributes to the broader goal of using immersive technologies to improve transparency, trust, and psychological well-being in healthcare communication.

Radiotherapy Patient Education with Augmented Reality for Post-Treatment Recovery

AR Virtual Mirror for Recovery Visualization

This project utilizes augmented reality (AR) to enhance patient and caregiver understanding of post-radiotherapy recovery. By implementing a virtual mirror interface, the system overlays visual representations of treatment areas and their expected toxicity levels over time.

Patients can visualize how specific regions of their body will respond and recover after treatment, offering a personalized and intuitive view of their healing process. This innovative approach transforms abstract medical information into tangible, easy-to-understand visual feedback that patients can explore at their own pace.

The primary aim is to empower patients by improving their mental preparedness, fostering resilience, and building confidence during the recovery phase. Additionally, the AR system serves as an educational tool for family members and caregivers, helping them better understand the patient's experience and how to provide appropriate support.

This project supports the broader goal of using immersive technologies to improve transparency, trust, and psychological well-being in healthcare communication. By bridging the gap between clinical information and patient understanding, the AR virtual mirror represents a significant advancement in patient-centered care.